Cyber threats are growing rapidly in both volume and complexity. Malware authors now use machine learning and AI techniques to evade detection, which makes these threats difficult for traditional antivirus systems to catch. AI for malware detection has changed the landscape through its ability to predict, analyse, and prevent potential attacks before they inflict serious harm. By combining behaviour analysis with sophisticated pattern identification, AI allows security systems to detect both known and new malware in real time.

As bad actors evolve, so too must defenders. AI-driven security systems provide defenders with the opportunity to keep pace with evolving threats.

Why Traditional Malware Detection Is No Longer Enough

Traditional malware detection is primarily based on identifying specific signatures associated with malicious software. Modern attackers build malware in many different ways, and most employ polymorphic, metamorphic, and obfuscation techniques so that their code does not match existing signature definitions when it is scanned.

When malware exploits a previously unknown vulnerability (often referred to as a ‘zero-day’), no matching signature exists yet to identify it. The bottom line is that static definitions leave security systems with many blind spots where malware can be missed.
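The blind spot can be illustrated with a minimal Python sketch. It assumes the simplest form of signature, an exact SHA-256 hash of a file; real antivirus signatures are often richer byte patterns. The sample bytes and the names `signature_db` and `matches_signature` are hypothetical placeholders, not part of any product.

```python
import hashlib

def sha256_hex(data):
    """Return the SHA-256 digest of a byte string as hex."""
    return hashlib.sha256(data).hexdigest()

# Hypothetical "known bad" sample: placeholder bytes, not real malware.
known_sample = b"EXAMPLE-MALICIOUS-PAYLOAD-v1"

# Hypothetical signature database holding hashes of known samples.
signature_db = {sha256_hex(known_sample)}

def matches_signature(data):
    """Exact-match lookup, as a static signature scanner would do."""
    return sha256_hex(data) in signature_db

# A trivially mutated variant: one padding byte appended.
mutated_sample = known_sample + b"\x90"

print(matches_signature(known_sample))    # True
print(matches_signature(mutated_sample))  # False
```

A single appended byte changes the hash completely, so the mutated sample no longer matches even though its behaviour would be unchanged.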

Attacks today also move much faster than manual processes can analyse them. Detection methods based solely on signatures therefore cannot keep up with the pace of evolving malware threats.

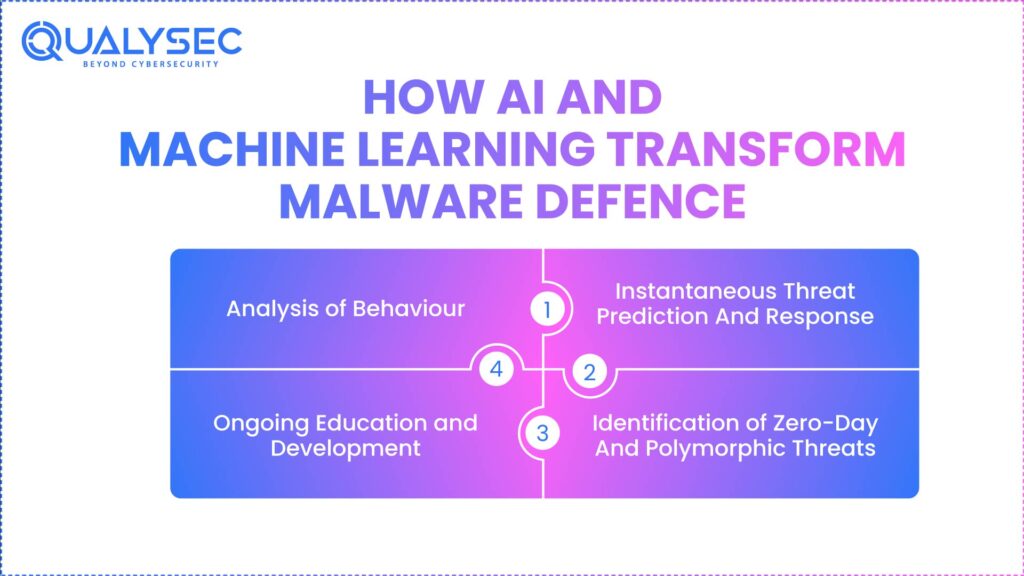

How AI and Machine Learning Transform Malware Defence

AI and machine learning have changed the way that security teams detect and respond to malware. Rather than focusing on a single code pattern, as was the case before, many of these systems now analyse software by looking for certain types of behaviour, patterns of activity, and anomalies in real time.

Because they learn from large data sets, these systems can identify new or mutated versions of malware. AI can learn and adapt as new techniques for attacking computers and networks emerge, which makes it a core component of today’s malware prevention systems.

1. Analysis of Behaviour

Rather than merely checking code signatures, behaviour analysis detects malware based on how an application acts. It observes system calls, file behaviour, and network activity to determine whether or not an application’s activity is typical. This enables the detection of malware even if its code has been encrypted or obfuscated. Behavioural analysis gives security teams a more accurate assessment of the malware threat.
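As a rough illustration, behaviour-based scoring can be sketched in Python as follows. This is a hand-weighted heuristic standing in for a learned model; the event names and weights are hypothetical and chosen only for the example.

```python
from collections import Counter

# Hypothetical weights for observed runtime events; values are illustrative only.
SUSPICIOUS_WEIGHTS = {
    "write_to_startup_folder": 3,
    "inject_into_process": 5,
    "disable_security_service": 5,
    "connect_to_unknown_host": 2,
}

def behaviour_score(events):
    """Sum the weights of all observed events; unknown events count as 0."""
    counts = Counter(events)
    return sum(SUSPICIOUS_WEIGHTS.get(name, 0) * n for name, n in counts.items())

benign_trace = ["open_file", "read_file", "close_file"]
odd_trace = ["inject_into_process", "connect_to_unknown_host", "connect_to_unknown_host"]

print(behaviour_score(benign_trace))  # 0
print(behaviour_score(odd_trace))     # 9
```

The score depends only on what the program does at runtime, so encrypting or obfuscating the code on disk does not change it.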

2. Instantaneous Threat Prediction And Response

Machine learning tools can assess threats and respond immediately and automatically, without waiting for an analyst to act. Based on probability scores, they rate activity as high, medium, or low risk and then block, quarantine, or isolate it accordingly. This reduces the time an attacker has to penetrate an organisation’s environment and limits the impact of threats that are gaining momentum.
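A minimal sketch of this mapping from probability score to risk tier to response might look like the following. The thresholds (0.9 and 0.5) and the action names are assumptions made for illustration; in practice they would be tuned for each environment.

```python
# Hypothetical thresholds and action names; real values would be tuned per environment.
HIGH_RISK = 0.9
MEDIUM_RISK = 0.5

ACTIONS = {"high": "quarantine", "medium": "isolate", "low": "log_only"}

def risk_tier(probability):
    """Map a model's maliciousness probability to a coarse risk tier."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if probability >= HIGH_RISK:
        return "high"
    if probability >= MEDIUM_RISK:
        return "medium"
    return "low"

def respond(probability):
    """Pick the automated action for a given probability score."""
    return ACTIONS[risk_tier(probability)]

print(respond(0.95))  # quarantine
print(respond(0.60))  # isolate
print(respond(0.10))  # log_only
```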

3. Identification of Zero-Day And Polymorphic Threats

Because AI learns from a wide range of malicious activity, it can detect brand-new attacks. Polymorphic malware has an ever-changing signature, but by studying behavioural patterns, AI can detect the intent behind the malware. Even if a polymorphic sample looks entirely new, the model can still catch it based on its behaviour, which makes zero-day threat detection more reliable.

4. Ongoing Education and Development

Through continuous analysis of new threat data and input provided by analysts, AI models keep learning and improving. When cybercriminals adapt their offensive operations, the model updates its picture of what ‘malicious’ looks like, which improves detection accuracy over time and helps it remain effective against the next wave of sophisticated threats.

Core ML Techniques Used in Modern Malware Detection

Modern malware detection draws on several machine learning methods, with a primary focus on the behaviour and patterns of the system. Deep learning models such as CNNs detect malicious code hidden in a file’s binary structure, while LSTMs and other RNNs detect behavioural anomalies in sequences of actions over time.

Conventional machine learning methods such as SVMs and decision trees remain valuable as lightweight malware classifiers. Combining conventional and deep learning approaches can yield higher accuracy and fewer false-positive notifications.
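One common way to let a CNN examine binary structure is to treat a file’s bytes as pixel intensities in a 2D grid. The article does not say which input representation is used, so the following Python sketch is an assumption made for illustration; `bytes_to_grid` and the width of 16 are hypothetical.

```python
def bytes_to_grid(data, width=16):
    """Arrange raw bytes into rows of `width` values (0-255), zero-padding the last row.

    The resulting grid can be fed to an image-style model as a grayscale picture.
    """
    if width <= 0:
        raise ValueError("width must be positive")
    padding = (-len(data)) % width
    padded = data + bytes(padding)
    return [list(padded[i:i + width]) for i in range(0, len(padded), width)]

grid = bytes_to_grid(b"\x01\x02\x03", width=2)
print(grid)  # [[1, 2], [3, 0]]
```

The model itself is omitted here; the sketch only shows the preprocessing step that turns a binary into something a convolutional network can consume.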

How AI Identifies Unknown and Polymorphic Malware

AI can detect previously unseen malware because it identifies behaviours instead of relying only on signatures. The signature of polymorphic malware is constantly changing, but the actions it takes frequently follow recognisable patterns.

By using machine learning models to track the sequences of actions that malware performs, AI security tools can identify patterns of interaction and other anomalies that indicate the presence of new malware. Machine learning enables these tools to detect new malware with a high degree of accuracy, including rapidly evolving and stealthy types.

1. Feature Abstraction

AI extracts meaningful features from a program’s interactions with the system, such as API calls or memory actions. These features tend to remain stable even when the malware’s code mutates, because the approach focuses on the intent of the attack rather than the structure of the malware. AI therefore catches threats that signatures miss, which improves the detection of complex and mature malware variants.
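To make the idea concrete, here is a small Python sketch that turns an API call trace into n-gram counts. The traces are hypothetical; the call names are real Windows API functions often associated with process injection, used here only as an example of a behaviour that stays the same across code mutations.

```python
from collections import Counter

def api_ngrams(calls, n=2):
    """Count overlapping n-grams of API call names in an execution trace."""
    return Counter(tuple(calls[i:i + n]) for i in range(len(calls) - n + 1))

# Hypothetical traces from two variants whose code bytes differ but whose
# runtime behaviour is the same.
trace_variant_a = ["OpenProcess", "VirtualAllocEx", "WriteProcessMemory", "CreateRemoteThread"]
trace_variant_b = list(trace_variant_a)

features_a = api_ngrams(trace_variant_a)
features_b = api_ngrams(trace_variant_b)

print(features_a == features_b)  # True
```

Because the features are derived from what the program does rather than from its bytes, both variants produce the same feature vector.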

2. Temporal and Sequential Modelling of Malware Behaviour

Malware acts over time, and LSTM-based models can capture the sequences of actions that show how malware and legitimate applications interact with a device and its users. Even after the code mutates, the sequence of actions still helps reveal whether activity comes from a user or from malware, including malware that imitates a user’s profile. This allows for the detection of present-day polymorphic threats.
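The intuition behind sequence modelling can be sketched without a deep learning library. The example below uses a first-order transition model with add-one smoothing as a much simpler stand-in for an LSTM; it is not an LSTM, and the event names and training traces are hypothetical.

```python
import math
from collections import defaultdict

def train_transitions(sequences):
    """Count how often each event is followed by each other event."""
    counts = defaultdict(lambda: defaultdict(int))
    for seq in sequences:
        for current, following in zip(seq, seq[1:]):
            counts[current][following] += 1
    return counts

def average_surprise(seq, counts, vocab_size):
    """Mean negative log2-probability of each transition (add-one smoothed).

    Higher values mean the sequence is less like the training data.
    """
    total, steps = 0.0, 0
    for current, following in zip(seq, seq[1:]):
        row = counts.get(current, {})
        probability = (row.get(following, 0) + 1) / (sum(row.values()) + vocab_size)
        total += -math.log2(probability)
        steps += 1
    return total / steps if steps else 0.0

# Hypothetical benign training traces and a small event vocabulary.
benign_traces = [["open", "read", "close"]] * 20
model = train_transitions(benign_traces)
VOCAB_SIZE = 5  # open, read, close, encrypt, delete

normal = average_surprise(["open", "read", "close"], model, VOCAB_SIZE)
unusual = average_surprise(["open", "encrypt", "delete"], model, VOCAB_SIZE)
print(normal < unusual)  # True
```

An LSTM plays the same role with far more context: it scores how expected each next action is given everything seen so far, rather than only the previous event.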

3. Adaptation to Concept Drift

AI models should be designed to support continuous training as malware patterns evolve. Continuous retraining maintains model accuracy and performance and keeps the model from becoming obsolete. Ongoing concept drift management helps maintain high detection rates.
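One simple way to decide when retraining is due is to track accuracy over a sliding window of analyst-verified verdicts. The Python sketch below assumes this approach; the class name `DriftMonitor`, the window size, and the accuracy floor are hypothetical choices for illustration.

```python
from collections import deque

class DriftMonitor:
    """Track recent prediction accuracy and flag when it falls below a floor."""

    def __init__(self, window=100, min_accuracy=0.9):
        self.results = deque(maxlen=window)  # True for each correct prediction
        self.min_accuracy = min_accuracy

    def record(self, predicted, actual):
        """Store whether the model's verdict matched the verified label."""
        self.results.append(predicted == actual)

    def needs_retraining(self):
        """Only judge once the window is full, to avoid noisy early signals."""
        if len(self.results) < self.results.maxlen:
            return False
        return sum(self.results) / len(self.results) < self.min_accuracy

monitor = DriftMonitor(window=10, min_accuracy=0.8)
for _ in range(10):
    monitor.record("malicious", "malicious")   # model agrees with analysts
print(monitor.needs_retraining())              # False

for _ in range(5):
    monitor.record("benign", "malicious")      # model starts missing new samples
print(monitor.needs_retraining())              # True
```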

4. Trust and Explainability

The AI platform explains its reasons for flagging an action as malicious. Analysts can see which behaviour or pattern caused the flag. This transparency allows detection models to be refined, decreases false positives, and builds trust in AI technology.
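For a linear scoring model, one straightforward form of explanation is to list the features that contributed most to the score. The sketch below assumes such a model; the feature names and weights are hypothetical, and more complex models would need dedicated explanation techniques.

```python
def explain(features, weights, top_k=3):
    """Return the top_k (feature, contribution) pairs by absolute contribution.

    Contribution is weight * observed value, as in a linear scoring model.
    """
    contributions = {
        name: weights.get(name, 0.0) * value for name, value in features.items()
    }
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return ranked[:top_k]

# Hypothetical learned weights and one observed sample.
weights = {"inject_into_process": 2.5, "connect_to_unknown_host": 0.8, "read_file": -0.1}
sample = {"inject_into_process": 1, "connect_to_unknown_host": 3, "read_file": 10}

for name, contribution in explain(sample, weights, top_k=2):
    print(name, round(contribution, 2))
```

An analyst reading this output can see which observed behaviours drove the verdict and can challenge a weight that looks wrong.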

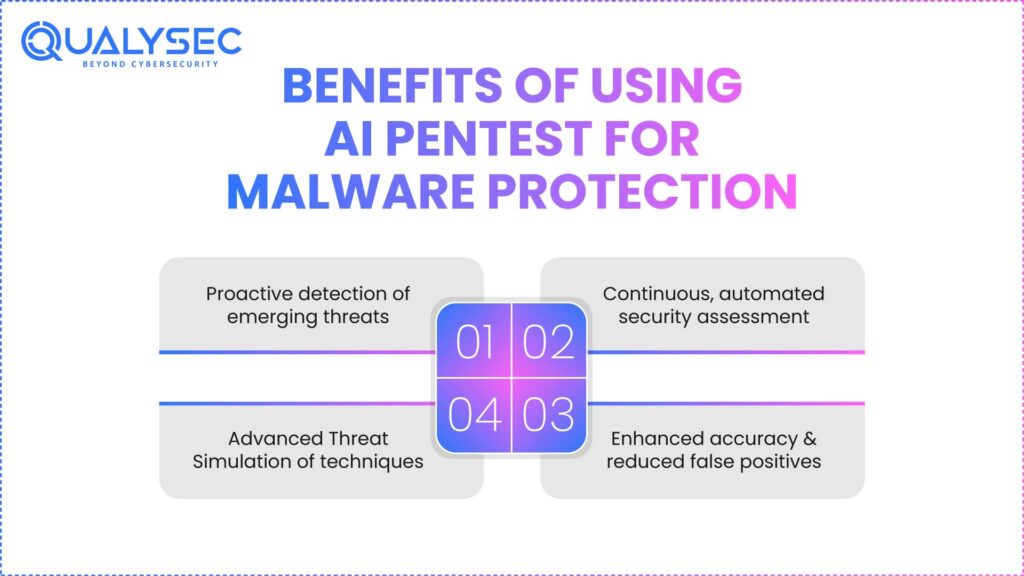

Benefits of Using AI Pentest for Malware Protection

An AI-powered penetration testing (AI Pentest) solution helps an organisation find malware threats faster and more accurately than manual (human-based) testing. AI Pentest continuously learns threat patterns and simulates possible future attacks.

With these capabilities, security teams can proactively strengthen their defences through faster detection times, reduced analyst workloads, and deeper visibility into unknown vulnerabilities across an organisation’s digital assets.

Proactive detection of emerging threats:

AI Pentest identifies emerging threats from previously unseen malware behaviours by analysing massive amounts of data in real time. It can analyse malware of many types based on code anomalies and traffic deviations.

AI-driven pentesting gives teams time to react before a threat becomes critical, which gives organisations a more robust defence against fast-paced attacks.

Continuous, automated security assessment:

Unlike traditional penetration testing, which testers perform periodically, AI pentesting operates around the clock and continually scans a network for security holes that new devices, application installations, configuration changes, and shadow app installations may create.

AI reduces reliance on human schedules and avoids lapses in security coverage. This continuous validation of security controls improves an organisation’s readiness for malicious attacks and significantly reduces its exposure periods.

In addition, AI-driven automation extends across larger hybrid organisations that rely on multiple cloud providers.

Enhanced accuracy and reduced false positives:

By using historical threat activity and analyst feedback to evolve detection rules, machine learning models improve accuracy and decrease the number of false positives in the alerts sent to Security Operations Centre (SOC) teams.

This results in fewer alerts, allowing security analysts to concentrate on those that represent an actual threat. Over time, organisations develop an efficient and accurate detection system and make better use of their analysts’ time by eliminating redundant, non-threat alerts.
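One way analyst feedback can reduce false positives is by recalibrating the alert threshold against labelled alerts. The following Python sketch assumes that approach; `pick_threshold`, the sample scores, and the 25% false-positive budget are hypothetical and chosen only to keep the example small.

```python
def pick_threshold(labelled, max_false_positive_rate=0.05):
    """Return the lowest score threshold whose false-positive rate fits the budget.

    `labelled` is a list of (score, is_malicious) pairs verified by analysts.
    An alert fires when score >= threshold.
    """
    benign_scores = [score for score, is_malicious in labelled if not is_malicious]
    if not benign_scores:
        raise ValueError("need at least one benign example")
    for candidate in sorted({score for score, _ in labelled}):
        false_positives = sum(score >= candidate for score in benign_scores)
        if false_positives / len(benign_scores) <= max_false_positive_rate:
            return candidate
    return float("inf")  # no observed score satisfies the budget

# Hypothetical analyst-labelled alerts: (model score, confirmed malicious?)
feedback = [(0.1, False), (0.2, False), (0.3, False), (0.6, False), (0.7, True), (0.9, True)]

print(pick_threshold(feedback, max_false_positive_rate=0.25))  # 0.6
print(pick_threshold(feedback, max_false_positive_rate=0.0))   # 0.7
```

Choosing the lowest threshold that fits the budget keeps as many true detections as possible while capping the noise sent to analysts.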

Advanced threat simulation:

AI pentesting tools can simulate sophisticated attacker techniques, such as polymorphic malware, lateral movement, and attempts to exploit zero-day vulnerabilities.

Using these simulations, researchers can determine whether malware can bypass different layers of defence with the same techniques used in a real-world environment. With the intelligence learned from these simulated attacks, engineers can create patches before attackers put an advanced technique to use.

Organisations that utilise these simulations will be in a better position to respond to advanced criminal threats and will have the advantage of understanding and analysing the methods employed by their adversaries.

Challenges and Limitations of AI for Malware Detection

For all its transformative potential, AI for malware detection brings its own set of challenges. AI-based antivirus models depend heavily on the quality of their training data, require substantial computing resources, and are susceptible to manipulation through adversarial inputs.

Moreover, AI models often produce results that cannot be explained, leaving security teams with no way to validate or justify the decisions the models make. Companies need to understand these limitations to use AI responsibly, mitigate the risks involved, and effectively leverage AI threat intelligence.

Reliance upon high-quality and diverse sources of training data:

The performance of AI models depends on the quality of the data used to train them. Incomplete, unbalanced, or outdated datasets can leave AI models with detection gaps and cause them to misclassify new strains of malware.

Threat actors can take advantage of these weaknesses by creating payloads that exhibit behaviours poorly represented in the training data. Companies must regularly curate and maintain their datasets, which is time-consuming and costly. If a company fails to update its datasets regularly, the detection tool will become inaccurate and unreliable.

Vulnerability to adversarial manipulation:

Increasingly, threat actors exploit weaknesses in AI models themselves. By adding slight variations to malware samples, they deceive models into misclassifying the malware and so get around automated detection and response. Such adversarial methods reduce the trustworthiness of fully algorithmic defences.

When threat actors present AI models with clever obfuscation techniques, the models can misclassify harmful code as harmless. The continued use of adversarial approaches is an ongoing problem that calls for pairing AI models with strong cyber threat intelligence and human analysis.
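The fragility can be shown with a deliberately naive detector. In the Python sketch below, the detector flags a trace when suspicious events make up at least half of it; this scoring rule, the event names, and the threshold are hypothetical and far simpler than a production model, but they show how small, harmless-looking additions can flip a verdict.

```python
# Hypothetical set of events the naive detector treats as suspicious.
SUSPICIOUS = {"inject_into_process", "disable_security_service"}

def naive_score(events):
    """Fraction of events in the trace that are on the suspicious list."""
    if not events:
        return 0.0
    return sum(event in SUSPICIOUS for event in events) / len(events)

def is_flagged(events, threshold=0.5):
    return naive_score(events) >= threshold

original = ["inject_into_process", "disable_security_service", "open_file"]

# The same harmful actions, diluted with benign-looking padding events.
padded = original + ["open_file"] * 5

print(is_flagged(original))  # True
print(is_flagged(padded))    # False
```

The harmful actions are identical in both traces; only the surrounding noise changed. This is one reason adversarial robustness testing and human review remain necessary alongside automated scoring.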

High computing costs and operational overhead:

Enterprise-level deployments of advanced, machine learning-based behavioural analysis tools require considerable processing and memory resources to operate. This can result in high costs for companies with geographically diverse critical infrastructure.

Beyond the financial cost, the load on infrastructure increases during peak usage times with heavy workloads and when examining large amounts of endpoint data, which raises the likelihood of performance bottlenecks. Organisations must therefore manage and optimise these resources effectively to keep the deployment sustainable over the long term.

Poor explainability and transparency of AI decision-making:

Many AI-driven detection systems function as ‘black boxes’ that give analysts no insight into why the system has flagged or ignored an object. This makes it more challenging for analysts to respond effectively to incidents and adds complexity to compliance reporting processes.

Security teams may have difficulty if they cannot validate the automated conclusions the AI system makes, and this is further compounded by their difficulty in identifying the root causes of false positives or negatives. This lack of insight may be a barrier to adoption by companies in regulated industries.

Overreliance on automation:

Organisations sometimes wrongly assume that AI technology can replace human judgment. This erodes oversight and may allow subtle or sophisticated threats to bypass detection, because the models may never have encountered that specific type of cyberattack.

It is paramount that organisations maintain a balanced approach to the use of AI by integrating the insights derived from AI technology with the experience of human security analysts. Without this balance, both detection accuracy and the effectiveness of strategic threat assessments may gradually degrade.

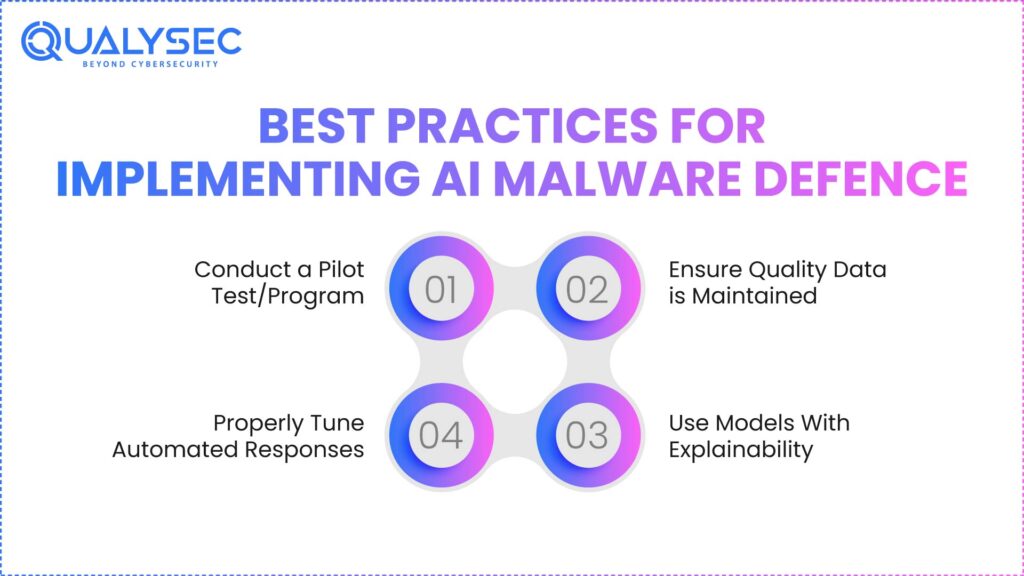

Best Practices for Implementing AI Malware Defence

Organisations should develop a sound plan for AI-based malware detection implementation, with due diligence on data quality and oversight. Proper integration into existing technology is critical for the best results from an AI security solution, as is training your employees to accurately interpret insights and validate actions made as a result of AI technology.

Following best practices will increase the accuracy and longevity of your AI security deployment, ultimately allowing you to get maximum value from the investment.

1. Conduct a Pilot Test/Program

Before implementing AI technologies, organisations should conduct pilot programs in a controlled setting. This allows for identifying any strengths, weaknesses, or issues related to the deployment of AI, as well as validating detection accuracy. Early pilot testing will allow for smoother overall deployment.

2. Ensure Quality Data is Maintained

Quality data ensures that models are trained properly and reduces false positives. The organisation must maintain an updated and accurate threat intelligence feed, with the inclusion of diverse attack sample types. Quality data is the foundation upon which any AI security model and AI security testing will be built.

3. Use Models With Explainability

Tools with clear processes make it easier for analysts to trust your system, as well as support compliance and auditing. Explainable AI also supports more informed decision-making, reduces confusion, and makes it easier to respond effectively.

4. Properly Tune Automated Responses

Automation is supposed to help humans make better-informed decisions, not replace their judgment. Organisations should therefore set guidelines for automated responses that take incident severity into account. Human input is still needed before critical actions are performed, so automated responses should be tuned carefully to minimise workflow disruptions.
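A response policy of this kind can be sketched as a small decision function. The Python example below is an assumption about how such guidelines might be encoded; the action names, severity levels, and the set of actions treated as critical are hypothetical.

```python
# Hypothetical set of actions considered disruptive enough to need a human decision.
CRITICAL_ACTIONS = {"isolate_host", "disable_account"}
SEVERITIES = {"low", "medium", "high"}

def plan_response(severity, proposed_action):
    """Decide how a proposed automated action should be handled."""
    if severity not in SEVERITIES:
        raise ValueError(f"unknown severity: {severity}")
    if proposed_action in CRITICAL_ACTIONS:
        return "await_analyst_approval"   # human input before critical actions
    if severity == "low":
        return "log_only"                 # avoid disrupting workflows for minor events
    return "auto_execute"

print(plan_response("high", "isolate_host"))     # await_analyst_approval
print(plan_response("medium", "block_hash"))     # auto_execute
print(plan_response("low", "block_hash"))        # log_only
```

Keeping the policy in one small, reviewable function makes it easier to adjust as the organisation learns which automated actions it is comfortable delegating.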

How Penetration Testing Enhances AI Malware Protection

Penetration testing services enhance the security of AI by identifying vulnerabilities while simultaneously providing organisations with high-quality data to train their models. Pentesters perform simulated attacks that reflect types of large-scale or targeted cyberattacks that real threat actors could perform.

Using penetration testing results to train AI systems gives organisations the information they need to confirm that their security models are functioning as intended. It also helps them identify potential weaknesses and develop strategies to address them. Combined, penetration testing and artificial intelligence create an effective and dynamic security paradigm for organisations.

1. Simulating Actual Attack Scenarios

Pentesting mimics the advanced techniques that even the most sophisticated attackers use, to see whether an AI system can detect them. This simulation also exposes blind spots in an AI detection model and assesses how the AI responds to high-intensity incidents. Together, this results in increased overall protection against advanced techniques.

2. Generating Valuable Training Data

Pentesting produces valuable labelled data for the AI to use in training. Examples include system calls, memory activity, and behavioural characteristics. The more realistic the training data, the more accurate the AI will be when detecting new types of threats.

3. Verifying Incident Response

An organisation that conducts pentesting can evaluate the accuracy of the automated responses triggered during tests. This helps ensure that containment processes trigger correctly and identifies weaknesses where personnel must monitor the response. Verifying incident response processes strengthens an organisation’s readiness for attacks.

4. Enhancing Model Accuracy Over Time

Pentest results inform model updates, providing an ongoing feedback loop that improves detection. With every cycle, the resilience of the system increases, and long-term accuracy improves.

Conclusion

AI for malware detection is an intelligent, nimble, and versatile means of stopping modern-day threats, using behaviour and pattern recognition to find attacks that traditional methods miss. As part of artificial intelligence in cybersecurity, AI detection reduces response times, increases accuracy, and supports ongoing training to detect new threats.

Although AI detection has obstacles to overcome, these can be addressed through careful planning and disciplined processes. Cyber threats will keep growing more complex, so adopting AI-based defence systems helps secure systems today while preserving the ability to secure them tomorrow as threats evolve.

Partner with Qualysec to explore practical approaches for improving cybersecurity and AI integration.

FAQs

1. What is the process by which AI identifies previously unknown versions of malware?

AI identifies previously unknown malware variants by recognising suspicious behaviour in files, system activity, and network traffic, which lets it spot anomalies that signatures would not detect. The system improves through continued training and refinement with new threat data.

2. What is the main difference between AI detection and traditional signature-based detection?

The main difference between traditional signature-based detection and AI detection lies in how threats are identified. Signature-based detection classifies files as infected or not infected based on known signatures, which makes it incapable of detecting new variations of malware. In contrast, AI can analyse file activity as well as network anomalies. As a result, AI can classify new files with unknown signatures as potential threats. This capability enables AI to evaluate and detect risks more broadly than traditional detection methods.

3. Is it possible for AI to defend against ransomware?

Yes. AI can be trained to detect unusual behaviours associated with ransomware, including rapid file encryption and unusual patterns of file access. This enables AI to intervene and stop ransomware from spreading through the system before significant damage occurs. AI can also identify the earliest indicators of a compromise, allowing for quicker containment of any threats.

4. How effective is AI at detecting fileless malware?

AI is well suited to detecting fileless malware because it concentrates on behaviour rather than files. Because fileless malware does not leave a file-based signature, AI uses runtime or behavioural analysis to detect memory-based attacks. AI is therefore a stronger way of detecting fileless malware than signature-based solutions.

5. What are adversarial attacks on AI malware detection systems?

Adversarial attacks against AI systems use modified inputs that take advantage of weaknesses in the AI model and confuse it into misclassifying the input. Attackers may make small changes to malware samples to circumvent detection. Attackers can also attempt to degrade a model by injecting “poisoned” data during training. These types of attacks aim to evade or weaken AI-based malware detection.

6. Is AI able to identify cyber attacks?

AI is capable of identifying a wide variety of cyber attacks by monitoring for unusual activity in user actions, network activity, and computer operations. AI can also identify specific types of activity that could indicate unauthorised usage or compromise of a system. As a result, it can help anticipate cyber attacks before they cause damage, strengthening the security posture of an organisation.
